Frontiers of Brain Science, day two

Our second day at the Kavli Science Journalism Workshop took us deeper into the mysteries of the brain. (Note that this post, as with the post on Day One, is quasi-transcription, not journalism.)

The brain creates special dilemmas for journalists; what could matter more for human existence than the brain itself? Yet during this two-day workshop it came out that most of what we know about how it works is speculation. When the specialists don’t understand things, it leads us to focus on their disagreements, which satisfies only those who like fights.

We got to see conflict starting with our first speaker, Rebecca Saxe, a star neuroscientist at MIT. She’s got a joyfulness about her research that makes her fun to listen to, and she’s young and energetic and sprinkled her talk with nice bits of trivia. As with faces, every brain is unique, she informed us, and she keeps a library of her graduate students’ brain scans. Cortex means ‘bark’ in Latin, which makes sense, though I hadn’t thought of the cortex as the bark of the brain. Saxe, though she looks cherubic, is darkly funny. “I tell my students, if they’re biking, to wear a helmet or a spike, because general brain damage is not useful, but specific brain damage is useful.”

That focus on specificity made Saxe the foil to our speaker at the end of day one, Lisa Feldman Barrett. Feldman Barrett argued that the brain does not have specific spots that deal with emotions. Saxe almost immediately began talking about how the amygdala was the center of emotional salience. Saxe called herself sympathetic to ‘essentialism,’ in this context the idea that a part of the brain is essential to a certain function, as is her teacher and mentor, Nancy Kanwisher (see some of her talks on the brain here), who spends her research time looking at specific things the brain does.

In Saxe’s favor are some well-established aspects of the brain. We know that people who have suffered brain damage might lose just one function, the ability to use numbers, for instance. Saxe herself has been involved in research that places the right temporal parietal junction, the place just above the right ear where the right temporal and parietal lobes meet, as a source for theory of mind, our ability to recognize that we and others have minds (Saxe dubbed it “thinking about thoughts”).

“It is just the weirdest idea,” Saxe said, that theory of mind would have a specific place in the brain. But after 15 years of trying to disprove this idea, she grudgingly accepts it, and speculates that either thinking about what other people are thinking was so evolutionarily important that a region of the brain developed for it, or that we spend so much time doing it that, as with reading, a part of the brain became dedicated to it.

It seemed to me that both Feldman Barrett and Saxe could be right: emotions could trigger places across our brains, while specific things like eyesight and motor coordination are rooted in one place.

It’s no surprise scholars disagree. Saxe told us there’s heated debate in the academic world about why human three-year-olds usually fail the False Belief test, where we expect other people to know what we know about an event, and why five-year-olds usually pass this test [here is a link to the portion of Saxe’s TED talk on the False Belief test, which she also showed us].

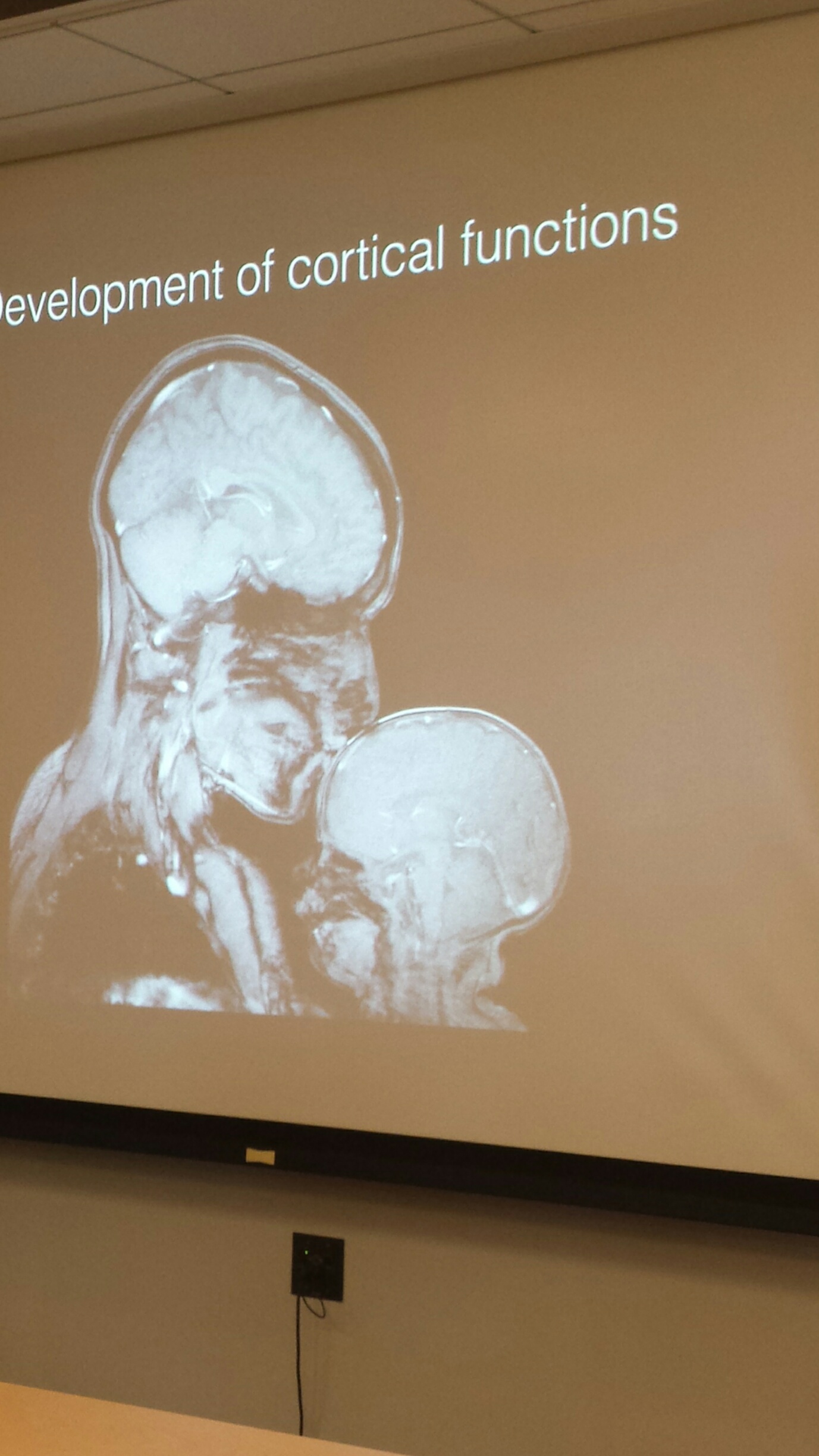

Maybe it’s the stage where a new evolutionary development takes place in our brain, or perhaps it’s just a very difficult task and we need time to learn it. This dispute is partly why Saxe studies cortical development. It interests her, she told us, because “it’s the part of the brain that changes the most evolutionarily, changes the most after the birth of a child and it’s the most disputed part of the brain about where its organization comes from.”

I will note, anecdotally, that people can continue to fail the False Belief test even as adults. Game theory banks on people developing false beliefs. My spouse and I often think the other knows what we know about some event involving the kids, for instance, but of course we do not.

Clearly, something happens in the brain between three and five for us to develop a theory of mind. But Saxe said it is difficult for neuroscientists to test hypotheses about the neural underpinnings of theory of mind, because three-year-olds can’t read and can’t lie still for the 15 or more minutes needed to make a usable brain scan.

I liked a side path Saxe took about the crisis in fMRI. fMRI works by averaging the flow of blood across regions of the brain. It does not give you specific reads on a specific place in the brain. You could not look at a specific voxel, an area of about 100,000 neurons. But then multivoxel analysis was developed, which let scientists look at the patterns in the brain more precisely. They could see differences in the patterns of blood flow activation in the brain. Saxe says this is giving a greater ability to see what is happening in the brain, and has salvaged fMRI technology.
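To make the averaging-versus-pattern distinction concrete for myself, here is a toy sketch in Python. It is not Saxe’s method or real scanner data: the voxel counts, noise level, and the simple correlation classifier are all my own assumptions, chosen only to show how two conditions can share the same regional average yet still be told apart by their spatial pattern.

```python
import numpy as np

def simulate_trials(pattern, n_trials, noise_sd, rng):
    """Return n_trials noisy copies of a voxel activation pattern (synthetic data)."""
    noise = rng.normal(0.0, noise_sd, size=(n_trials, pattern.size))
    return pattern + noise

def correlation_classify(train_a, train_b, test):
    """Label each test trial by the condition whose mean training pattern it correlates with more."""
    mean_a = train_a.mean(axis=0)
    mean_b = train_b.mean(axis=0)
    labels = []
    for trial in test:
        r_a = np.corrcoef(trial, mean_a)[0, 1]
        r_b = np.corrcoef(trial, mean_b)[0, 1]
        labels.append("A" if r_a > r_b else "B")
    return labels

rng = np.random.default_rng(0)
n_voxels = 50  # hypothetical region size, chosen for illustration

# Two made-up conditions: identical average activation, opposite spatial patterns.
base = rng.normal(0.0, 1.0, n_voxels)
base -= base.mean()  # force zero mean so both conditions average to exactly 1.0
pattern_a = 1.0 + base
pattern_b = 1.0 - base

train_a = simulate_trials(pattern_a, 40, 3.0, rng)
train_b = simulate_trials(pattern_b, 40, 3.0, rng)
test_a = simulate_trials(pattern_a, 40, 3.0, rng)
test_b = simulate_trials(pattern_b, 40, 3.0, rng)

# Region-average view: the two conditions look nearly the same.
mean_gap = abs(test_a.mean() - test_b.mean())

# Pattern view: classify held-out trials from their voxel-by-voxel pattern.
pred_a = correlation_classify(train_a, train_b, test_a)
pred_b = correlation_classify(train_a, train_b, test_b)
accuracy = (pred_a.count("A") + pred_b.count("B")) / (len(pred_a) + len(pred_b))

print(f"difference in region averages: {mean_gap:.3f}")
print(f"pattern classification accuracy: {accuracy:.2f} (chance is 0.50)")
```

In this made-up setup the region averages barely differ while the pattern classifier does far better than a coin flip. Real data are much noisier, which may be why “better than chance” is the usual bar.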

Even so, Saxe let slip that this stuff is just “better than chance,” which I found a weak base for drawing important conclusions. Still, it has led to a study she submitted just last month that showed we can use scanners as effectively as regular psychological tests to distinguish between justified and unjustified beliefs in young children, the first time that multivoxel pattern analysis (MVPA) has been applied to kids, she claimed (in part because kids are so hard to scan).

Saxe said it was the first study that features adolescents being scanned. Afterwards, some of the gathered journalists expressed skepticism that she’s actually been able to get adolescents to lie in a scanner without moving for the hour and a quarter she says she needed. But Saxe swears it’s true, showed an image of kids being trained in play scanners, and described her methods for getting them to lie still, including having a dog for them to pet and a person constantly in attendance. It will be interesting to see if someone can replicate this study.

It should be easier to replicate the work of our second speaker on day two, Josh Hartshorne, a young cognitive psychologist now teaching at Boston College.

Hartshorne uses massive online surveys to study how the brain develops over time, and he’s finding a different pattern than past research would suggest. Traditionally we expect to see a youthful peak across a wide range of brain functions and then a slow decline. That tradition is based on a slim set of data, most of which is not publicly available.

Hartshorne says the new data suggests a different range. For some things, it is true that our processing speed peaks young and then declines. This is most pronounced for digit symbols and for arranging pictures, both of which are measures of how fast one thinks. We peak at remembering names between 20 and 24. (I remember amusing myself at parties by showing how many names I could remember after having met people just once, and reaching well past a dozen. I no longer would do well at that game.) But for a similar task, remembering faces, our peak is older, at 30 to 31. That is an odd disparity, Hartshorne says. Odder still is vocabulary. We continue learning new words into our 40s, say some studies, and into our early 50s, say others.

Hartshorne also talked about the Flynn effect – it surprised him that almost none of us knew what it was. Flynn noticed that IQ tests have to be renormed every 20 years or so, as average scores consistently rise above 100. Are we actually getting smarter generation by generation? Perhaps not – IQ tests measure only facets of intelligence. But obviously we get better at IQ tests. Some of that may reflect a culture becoming more scientific, since IQ tests measure what people need to be good at science, not general intellect. Hartshorne’s assertion that for several centuries western cultures have been moving more towards scientific thinking feels broadly correct, given the Enlightenment and its descendants, the Industrial and Information Revolutions.

Even in the era of television, loathed by intellectuals for its passivity, we see a smartening. Hartshorne pointed to the seminal sitcom, I Love Lucy, which focused for 30 minutes on the development of one plot. There was no subplot, no other threads. Now we expect three to four plots in a 30-minute show (it could be that TV writers have just gotten better at reflecting what we’ve always been good at).

One important thing the Flynn effect suggests: the brain can be trained, over time. That may mean that brain games might actually work to make our brains better. It also might bode well for the re-skilling we’re going to need as our existing jobs morph and disappear.

At least, that’s the hope. As Hartshorne put it, “I can’t tell you the new theory, we don’t know. This is one of the most exciting times to be a scientist, when old paradigms fall apart and something’s coming and we don’t know what it is.” If he’s right, history books will point back.

Hartshorne reminded us that the goal of scientists is not to be right, it’s to be less wrong. That is a good thing for journalists to keep in mind, but it runs exactly counter to our typical story line, at least in technology journalism.

After lunch is the worst time to speak, but our post-lunch speaker, Bobby Kasthuri, was such a showman nobody would fall asleep. He has been mapping the brain. To be specific, he has built a map of about 1 billionth of a mouse brain, a nanometer by a nanometer by a nanometer. Still, it’s a start, and some day that may help us understand a good deal more of the way the brain works.

Kasthuri was endearingly outlandish. “We are post-genomic compared to animals,” he said, meaning we can grow and think of things that he said are surely beyond our genome. He said this was possibly also true for some other animals, potentially primates, that they share information beyond their genomic needs. “For us it seems to be more the rule than the exception. The vast majority of things we do in a day are probably not encoded in our genome,” Kasthuri said.

A big thought. I mentioned this to another speaker later on, who thought it a silly thing for Kasthuri to say. But it was fun to hear and it kept us awake!

Kasthuri is a raging idealist. He quoted John F. Kennedy’s “We choose to go to the moon” speech to describe why he wants to map that other uncharted territory, the brain. He conveniently left out that Kennedy’s soaring rhetoric was largely driven by the need to best the Soviets, not by a desire for knowledge. But if you’re trying to inspire people, best to cite JFK’s loftiness, not his underlying moral cynicism. Coincidentally, I’m reading John Irving’s A Prayer for Owen Meany, and am at the part of the novel where Meany has gone from inspired by Kennedy to disillusioned by Kennedy [warning: Owen Meany speaks and writes in all caps, which becomes normal during a 600-page novel but is jarring otherwise]:

“I THOUGHT KENNEDY WAS A MORALIST. BUT HE WAS JUST GIVING US A SNOW JOB, HE WAS JUST BEING A GOOD SEDUCER. I THOUGHT HE WAS A SAVIOR. I THOUGHT HE WANTED TO USE HIS POWER TO DO GOOD. BUT PEOPLE WILL SAY AND DO ANYTHING JUST TO GET THE POWER, THEN THEY’LL USE THE POWER JUST TO GET A THRILL.” (pp. 436-437)

Anyway, Kasthuri is probably more of a moralist than Kennedy. Kasthuri is mapping the brain because he thinks, like Freud, that anatomy is destiny. Freud, being Freud, was talking about our genitalia, but Kasthuri is more high-minded. Puns aside, the contention is that if we understand the structure of the brain, it will tell us things about who we are and why we do what we do. He introduced us to Santiago Ramón y Cajal, “the Einstein of neuroanatomists.” Cajal was the first to show the brain was not one big cell but made up of many, many cells.

Kasthuri thinks the brain prunes those cells radically over time, one reason why adult brains are so much less plastic than the brains of children. He argued that if he and his pre-teen daughter were dropped into a foreign culture, his daughter would adapt well and he would not.

Kasthuri conjectured that once we can map the brain, we may find that the brains of schizophrenics don’t grow up in the same way as other brains, that they will look a good deal like youth brains. Children, he notes, have imaginary friends and hear voices in their heads, and no one calls them crazy.

But right now, he says, we are pre-Copernican. We don’t have the instruments we need to prove our theories. And we need new instruments to navigate the brain, with its 100 trillion neurons and 1 quadrillion connections. That’s 10 times the stars in the Milky Way galaxy. By the way, the map of the brain he hopes to make will be 1 zettabyte, or 1 trillion gigabytes of data. For comparison, through 1999, the sum of all information produced by humans was about 0.012 zettabytes. He’s hoping to start a brain observatory that anyone can apply to use, the way astrophysicists apply for time on telescopes.

The final speaker was Van Wedeen, a Harvard radiologist. Wedeen says the structure of the brain is the least well understood part of the body. “The shoulder joint, the eyeball, the wing of a bird, have wonderful engineering explanations. For the brain it appears we almost don’t know where to start,” he said.

Wedeen paid us the ultimate compliment of talking to us like we were advanced medical researchers. That meant a huge portion of his talk was above my head (a cliché, true, but it just means things couldn’t sink into my brain).

Wedeen’s own work has shown that the brain has unexpected connections. We would think it would transmit things in waves. Instead, it sends information, signals, along interconnected grids. These grids should not share surfaces; they are three dimensional, yet his work shows they do share two dimensional surfaces.

The rest I can’t really put in context; it is my challenge now to learn things like what he means when he talks about the orthogonal brain, and why humanity’s most important structure seems to communicate so improperly.

The other thing I’m grappling with is how the brain is its own frontier, one we know almost less about than that supposedly final frontier, space. The brain in its way is the last bastion of individualism. Will some future Frederick Jackson Turner find that once we chart it, it hems us in?